Anyone exploring NLP soon runs into Large Language Models, or LLMs. Yet text generation and summarization are only the tip of the iceberg. Beneath the surface lies a less-talked-about concept: LLM embeddings. This article explains what LLM embeddings are, contrasts them with fine-tuning strategies, looks at how LLM vector embeddings work, and surveys open-source options.

[…]

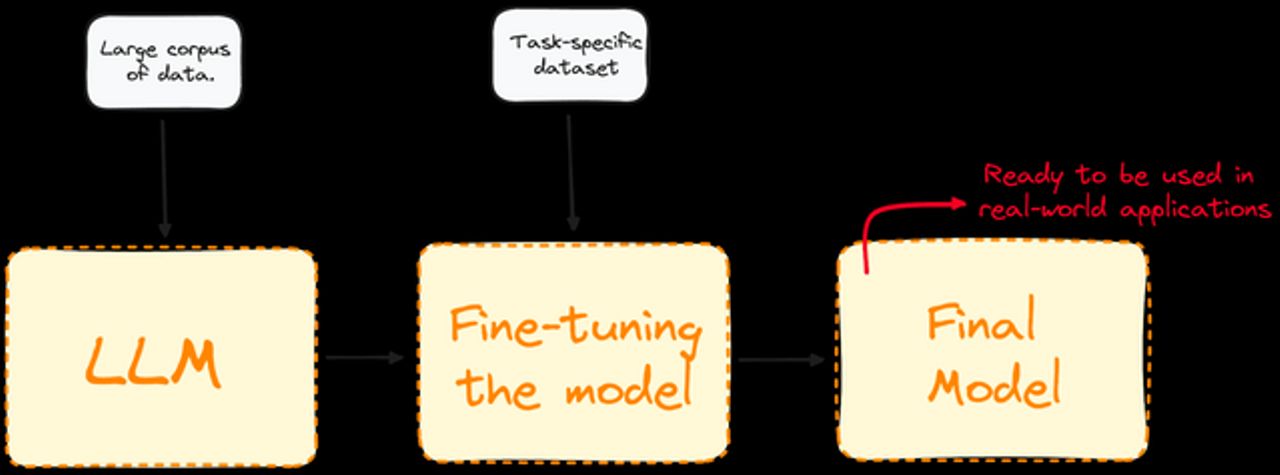

If you're brand new to LLM embeddings, the first question is usually how they differ from fine-tuning. Fine-tuning is like getting bespoke, tailor-made clothing: it continues training the pre-trained LLM on your own data, so the model is molded specifically to your tasks. Embedding is more like off-the-rack clothing: the pre-trained model is used as-is to turn text into vectors, which is useful across many tasks but not fitted to yours. So, when deciding between LLM fine-tuning vs. embedding, think about the level of customization you require.
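To make the "off-the-rack" side concrete, here is a minimal sketch of the embedding workflow: text goes through a fixed function that returns a vector, and vectors are compared by cosine similarity. Nothing is trained or updated. Note that `embed` here is a hypothetical toy stand-in (plain word counts) so the example runs without downloading a model; a real LLM embedding would be a dense vector produced by a pre-trained network, and fine-tuning, which would update that network's weights, is not shown.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a frozen, pre-trained embedding model (assumption).

    Returns sparse word counts instead of a dense LLM vector, purely so the
    example is self-contained. The key property is the same: the function
    is fixed and is never adapted to our data.
    """
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar(query, documents):
    """Return the document whose embedding is closest to the query's."""
    query_vec = embed(query)
    return max(documents, key=lambda doc: cosine(query_vec, embed(doc)))


documents = [
    "reset your password from the settings page",
    "our office is closed on public holidays",
]
best = most_similar("how do I reset my password", documents)
print(best)
```

In practice you would swap `embed` for a call to an actual embedding model and keep the rest of the logic unchanged, which is what makes this approach quick to adopt compared with fine-tuning.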

[…]