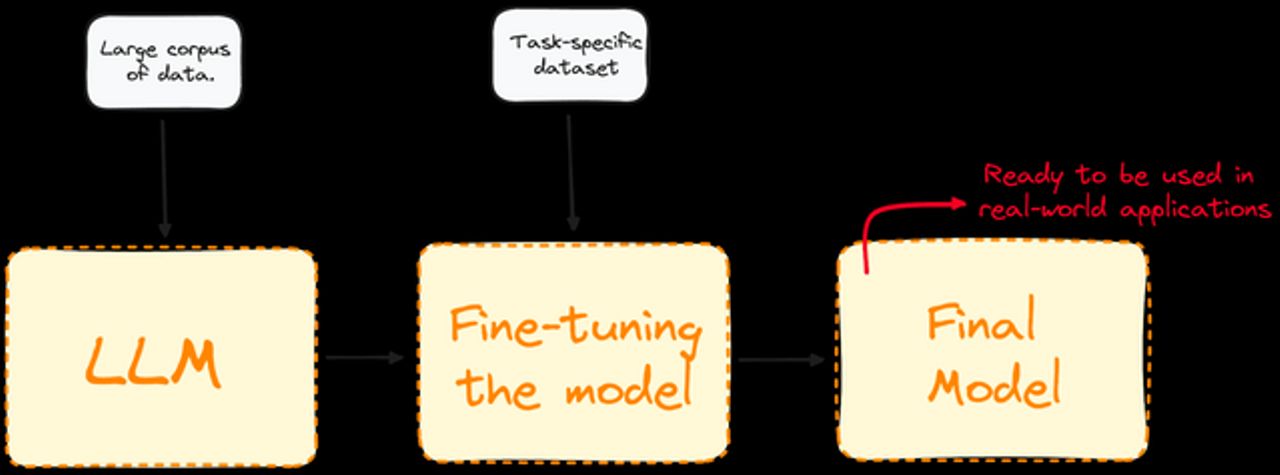

As you go deeper down the rabbit hole of building LLM-based applications, you may find that you need to ground your LLM responses in your source data. Fine-tuning an LLM with your custom data may get you a generative AI model that understands your particular domain, but it may still be subject to inaccuracies and hallucinations. This has led a lot of organizations to look into retrieval-augmented generation (RAG) to ground LLM responses in specific data and back them up with sources.

[…]

A slightly more complicated method pays attention to the content itself, albeit in a naive way. Context-aware chunking splits documents on punctuation such as periods and commas, on paragraph breaks, or on markdown or HTML tags if your content contains them. Most text contains these sorts of semantic markers, which indicate which characters make up a meaningful chunk, so using them makes a lot of sense. You can also chunk documents recursively into smaller, overlapping pieces, so that a chapter gets vectorized and linked, but so does each page, paragraph, and sentence it contains.
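As a rough illustration, here is a minimal Python sketch of recursive, context-aware chunking. The separator hierarchy (markdown headings, then blank-line paragraph breaks, then sentence-ending punctuation), the `Chunk` record, and the `chunk_hierarchically` function are hypothetical choices made for this example, not any particular library's API. Each chunk stores a `parent_id`, so a larger chunk overlaps the smaller chunks nested inside it, and every level can be embedded and linked back to the chunk that contains it.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical separator hierarchy, coarsest first: markdown headings,
# blank-line paragraph breaks, then sentence-ending punctuation.
LEVELS = [
    ("section", re.compile(r"\n(?=#{1,6} )")),
    ("paragraph", re.compile(r"\n\s*\n")),
    ("sentence", re.compile(r"(?<=[.!?])\s+")),
]


@dataclass
class Chunk:
    id: int
    level: str
    text: str
    parent_id: Optional[int]  # link back to the enclosing chunk (None for the root)


def chunk_hierarchically(text: str) -> List[Chunk]:
    """Return one chunk for the whole text plus one per nested section,
    paragraph, and sentence, each linked to its parent chunk."""
    chunks: List[Chunk] = []

    def add(level: str, piece: str, parent_id: Optional[int]) -> int:
        chunks.append(Chunk(len(chunks), level, piece, parent_id))
        return chunks[-1].id

    def split(piece: str, depth: int, parent_id: int) -> None:
        if depth == len(LEVELS):
            return  # finest level reached: this piece is a leaf
        name, pattern = LEVELS[depth]
        parts = [p.strip() for p in pattern.split(piece) if p.strip()]
        if len(parts) <= 1:
            # No marker at this level: descend without emitting a duplicate chunk.
            split(piece, depth + 1, parent_id)
            return
        for part in parts:
            # Emit the child chunk, then recurse into it with finer separators.
            split(part, depth + 1, add(name, part, parent_id))

    root_text = text.strip()
    root = add("document", root_text, None)
    split(root_text, 0, root)
    return chunks


if __name__ == "__main__":
    sample = "# Intro\nChunking matters. It affects retrieval.\n\nSecond paragraph."
    for c in chunk_hierarchically(sample):
        print(c.id, c.parent_id, c.level, repr(c.text))
```

Here the overlap comes from nesting: a paragraph chunk contains the text of its sentence chunks. A sliding-window overlap between neighboring chunks at the same level is another common variant and is not shown in this sketch.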

[…]