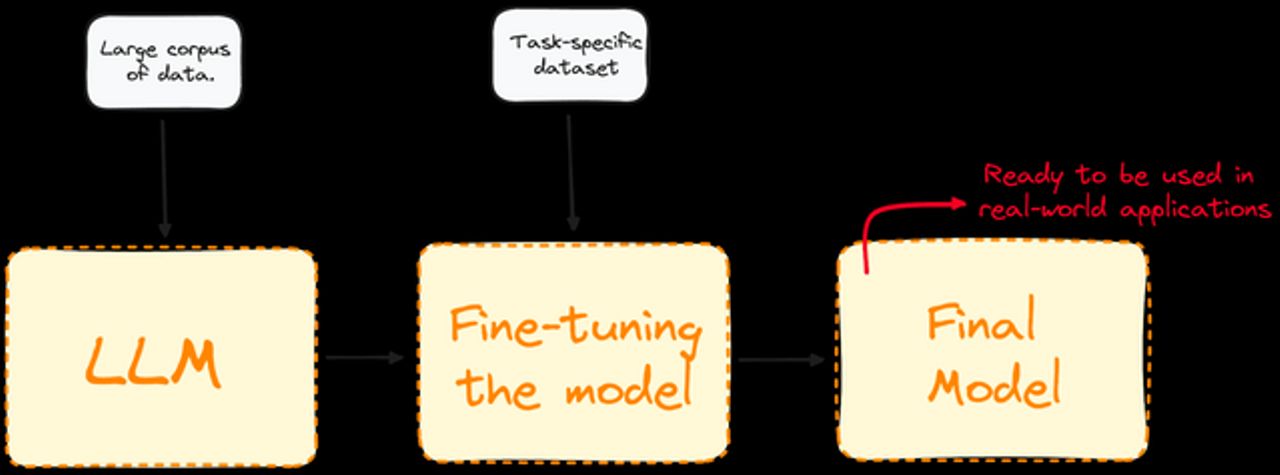

In my previous post, I explored how to develop a Retrieval-Augmented Generation (RAG) application by leveraging a locally run Large Language Model (LLM) through Ollama and LangChain. This time, I will demonstrate how to build the same RAG application with a different tool, LlamaIndex. All source code related to this post has been published on GitLab. Please clone the repository to follow along.

LlamaIndex is a comprehensive framework for building production-grade RAG applications. It provides a user-friendly and flexible interface that lets developers connect LLMs to external data sources, such as documents in formats like PDF and PowerPoint, applications like Notion and Slack, and databases, including private ones, such as PostgreSQL and MongoDB.
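To make the idea of "connecting an LLM to external data" concrete, here is a toy, standard-library-only Python sketch of the retrieve-then-augment loop that a framework like LlamaIndex automates. It is not LlamaIndex's API: word-overlap cosine similarity stands in for vector embeddings, the sample chunks are made up for illustration, and the final prompt is printed rather than sent to an LLM (for example, one served locally by Ollama).

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors (a stand-in for embeddings)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Hypothetical helper: return the top_k chunks most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Hypothetical helper: augment the question with retrieved context for the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\nContext:\n{joined}\nQuestion: {query}"


if __name__ == "__main__":
    # Made-up document chunks standing in for an external data source.
    chunks = [
        "Ollama runs large language models locally.",
        "PostgreSQL is a relational database.",
        "LlamaIndex connects LLMs with external data sources.",
    ]
    question = "How does LlamaIndex connect LLMs to data?"
    # In a real pipeline, this prompt would be passed to the LLM instead of printed.
    print(build_prompt(question, retrieve(question, chunks, top_k=1)))
```

In an actual LlamaIndex application, loading, chunking, embedding, and retrieval are handled by the framework; the helper names `retrieve` and `build_prompt` above are illustrative only.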